Our group works at the intersection of Security, AI, and HCI. We study people’s safety concerns, decision-making behaviors, and the role of power dynamics in innovation across the AI development lifecycle. Our work addresses complex privacy and safety challenges in AI and leverages AI’s capabilities to solve long-standing privacy issues. We draw on theories and techniques from information and computer sciences (e.g., privacy and security, human-computer interaction, machine learning) as well as social sciences (e.g., behavioral economics). Our research often follows an iterative design process that consists of studying users, building prototypes, designing experiments, and evaluating them empirically. More recently, we have been addressing questions such as: How can we design secure systems that improve the quality of human interaction and human data authenticity in digital ecosystems?

We have the following main lines of research:

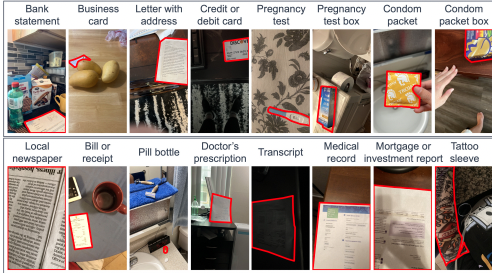

Usable Security and Privacy: We design mechanisms that help people with different backgrounds and characteristics protect their privacy and security. A large part of this line of work builds solutions for visual privacy by leveraging computer vision techniques.

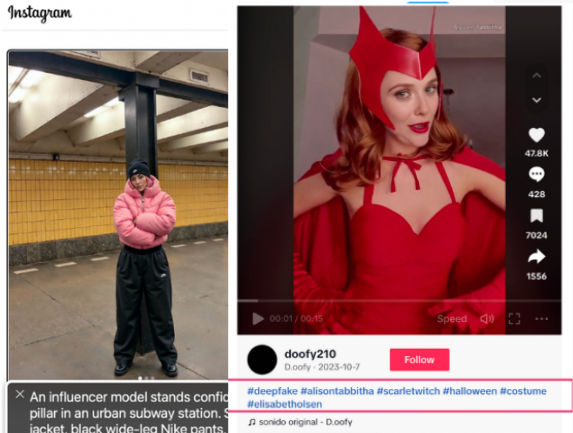

Content Provenance and Identity: This line of work focuses on verifying where digital content (such as AI-generated media) comes from and who created or modified it to maintain trust and safety online. Our work in this area involves designing and evaluating novel solutions for verifying humanness and developing context-aware provenance of content to combat platform abuse, Sybil attacks, and coordinated misinformation campaigns.

Human-Centered AI: We design and develop AI systems that are centered around human needs, values, and behaviors. Our research explores domains such as search engines, targeted advertising on social media, AI assistive technologies, and large language model–based applications.

Computational AI Governance: This line of work challenges the top-down governance model controlled by corporations, which leaves limited opportunity for meaningful public input, by developing computational governance mechanisms grounded in participation, collective intelligence, and decentralized systems.

Prospective Students: We are always looking for PhD students and interns to join our group. Please review the Interested to Join Us page, email me your CV, and fill out this form.

This generous support will drive our research on "Designing Accessible Tools for Blind and Low-Vision People to Navigate Deepfake Media".

We are super excited to welcome new PhD students!

AI Indicators and Label Design for AI-Generated Content

Preprint

AI-generated content has become widespread through easy-to-use tools and powerful generative models. However, provenance indicators are often missed, especially when relying on visual cues alone. This study explores how blind and sighted individuals perceive and interpret these signals, uncovering four mental models that shape their judgments about authenticity and authorship in digital media.

Mitigating Voice-based Deception

Accepted at the AAAI/ACM Conference on AI, Ethics, and Society

Voice plays a critical role in sectors like finance and government, but it is vulnerable to spoofing via synthetic audio. Because voice is one of the most ubiquitous modes of human communication, it has become a major vector for large-scale scams and fraud, particularly as voice models rapidly advance in both capability and public use. This work investigates voice-based deception targeting people whose voices are highly exposed, personally and professionally, with the aim of building socio-technical solutions and mitigating long-tailed risks.

Decentralized Decision in AI Governance

Accepted at the AAAI/ACM Conference on AI, Ethics, and Society

A major criticism of AI development is the lack of transparency, particularly insufficient documentation and traceability in model design, specification, and deployment, which leads to adverse outcomes including discrimination, lack of representation, and breaches of legal regulations. This work explores innovative designs for collective decision-making platforms. We investigate and employ emerging models such as Decentralized Autonomous Organizations (DAOs) as technical elements that support varied structural concepts from management science and community coordination. Early work includes applying this decision platform to AI governance on critical and sensitive topics.

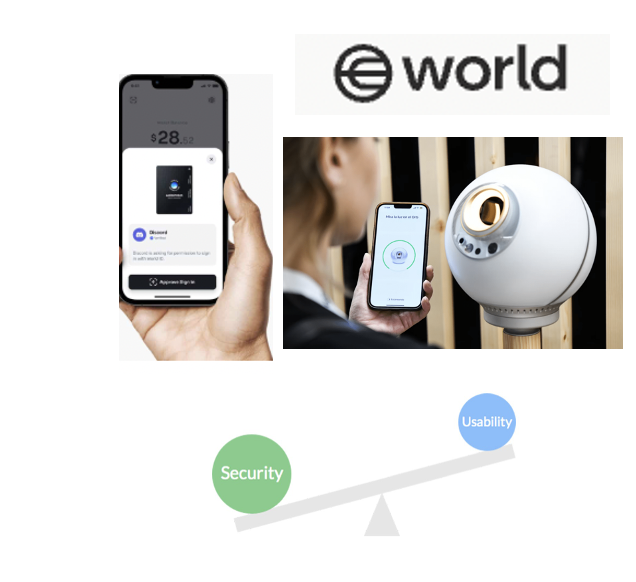

Personhood Credentials Design for Enhanced Human Interaction Verification in Digital Identity Crisis

USENIX PEPR 2025

Personhood credentials (PHCs) enable individuals to verify they are human without revealing unnecessary data. This study investigates user perceptions of PHCs versus traditional identity verification. Participants favored features like periodic biometric checks, time-bound credentials, interactive human checks, and government involvement. We present actionable design recommendations rooted in user expectations.

Visual Privacy Management with Generative AI

Accepted at ACM ASSETS

Blind and low-vision (BLV) individuals use Generative AI (GenAI) tools to interpret and manage visual content in their daily lives. While such tools can make visual content more accessible and thereby enable greater user independence, they also introduce complex challenges around visual privacy. This work investigates how people use GenAI tools for self-presentation, navigation, and professional tasks. Findings reveal user preferences for privacy-aware design features including on-device processing, redaction tools, and multimodal feedback. We offer guidelines to ensure privacy and empowerment in GenAI systems.

- Ayae Ide, Ph.D. Student
- Lili Dudas, Ph.D. Student (Co-advised with Kelley Cotter)
- Aljawharah M. Alzahrani, Ph.D. Student (Scholarship)
- Yihao Zhou, Master's Student
- Tory Park, Cybersecurity Analytics Undergrad
- Ryan John Oommen, Researcher, IUG Student

We are grateful for the support from:

arXiv
